The easiest way I've found to get all files, provided nothing is hidden behind a non-public directory, is to use the mirror command. It is also good practice to deal only with the highest-level directory you specify (instead of downloading all of ``, you're just mirroring from `.vim`).
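Here is a minimal sketch of that mirror approach as a POSIX shell function. The name `mirror_site`, the `DRY_RUN` switch and the `https://example.com/docs/` URL are hypothetical. `--page-requisites` and `--adjust-extension` are my own additions, and `--no-parent` is one way to keep wget at or below the directory you specify.

```shell
#!/bin/sh
# Sketch: mirror a site with wget without climbing above the start directory.
# mirror_site and DRY_RUN are hypothetical names used only for illustration.
mirror_site() {
  # Options used below:
  #   --mirror            (-m)  recursion, infinite depth, timestamping
  #   --page-requisites   (-p)  also fetch images, CSS and scripts pages need
  #   --adjust-extension  (-E)  add .html/.css suffixes where URLs lack them
  #   --convert-links     (-k)  point links at the downloaded local copies
  #   --no-parent         (-np) never ascend above the directory you specify
  set -- wget --mirror --page-requisites --adjust-extension \
    --convert-links --no-parent "$1"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"   # only show the command
  else
    "$@"                 # run wget for real
  fi
}
```

Running `DRY_RUN=1 mirror_site 'https://example.com/docs/'` only prints the command so you can check it first; dropping `DRY_RUN=1` starts the actual download.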

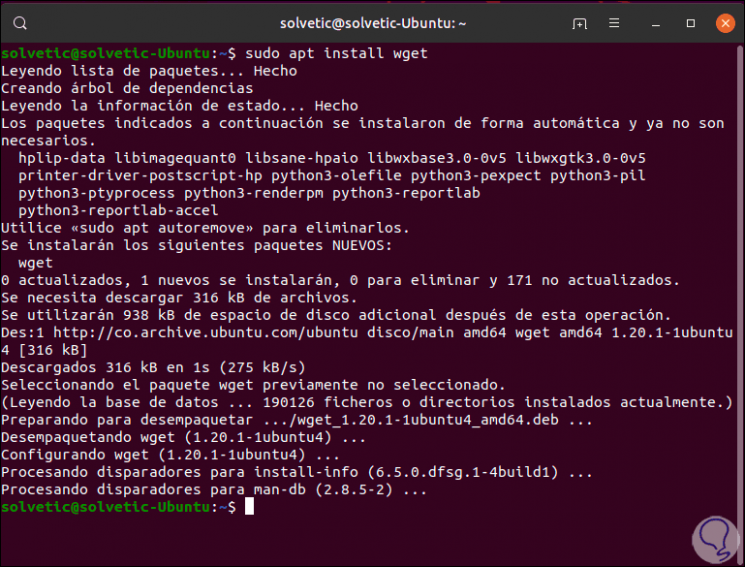

It sounds like you're trying to get a mirror of your file. While wget has some interesting FTP and SFTP uses, a simple mirror should work. Here are a few considerations to make sure you're able to download the file properly.

Respect robots.txt: ensure that if you have a /robots.txt file in your public_html, www, or configs directory, it does not prevent crawling. If it does, you need to instruct wget to ignore it by adding the following option to your wget command: `wget -e robots=off ''`.

Additionally, wget must be instructed to convert links so that they point to the downloaded files (`--convert-links`, or `-k`). If you've done everything above correctly, you should be fine here.
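The robots.txt check can be sketched as below, assuming you have a local copy of the file (for example from public_html). The helpers `robots_blocks_all` and `wget_options` are hypothetical, and the parser is deliberately simplified: it only looks for `Disallow: /` in a `User-agent: *` group and ignores `Allow` rules, wildcards and agent-specific groups.

```shell
#!/bin/sh
# Sketch: decide whether wget needs "-e robots=off" by reading a local
# robots.txt. robots_blocks_all and wget_options are hypothetical helpers.

# Succeeds (status 0) if FILE disallows "/" for "User-agent: *".
# Simplified: ignores Allow rules, wildcards and agent-specific groups.
robots_blocks_all() {
  [ -f "$1" ] || return 1   # no robots.txt, so nothing blocks crawling
  awk '
    {
      line = tolower($0)
      sub(/#.*/, "", line)          # strip comments
      gsub(/[ \t\r]/, "", line)     # strip spaces, tabs and CRs
    }
    line ~ /^user-agent:/ { star = (line == "user-agent:*"); next }
    star && line == "disallow:/" { found = 1 }
    END { exit (found ? 0 : 1) }
  ' "$1"
}

# Print the wget options to use: always mirror and convert links so saved
# pages point at the downloaded files; add "-e robots=off" only if needed.
wget_options() {
  if robots_blocks_all "$1"; then
    printf '%s\n' '-e robots=off --mirror --convert-links'
  else
    printf '%s\n' '--mirror --convert-links'
  fi
}
```

You could then run something like `wget $(wget_options public_html/robots.txt) 'https://example.com/'`. This relies on the unquoted substitution being split into separate words.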

## Full recursive download with wget

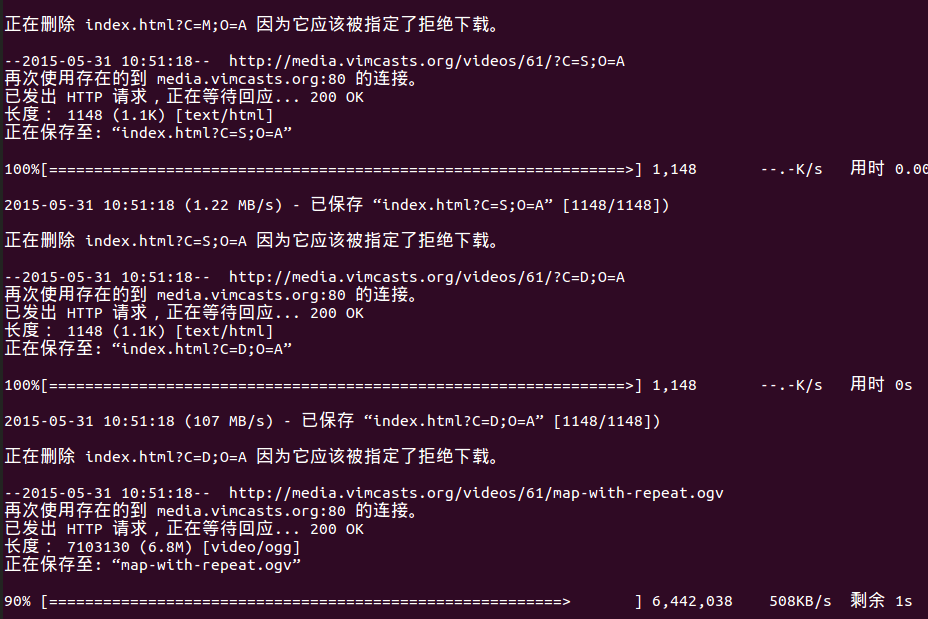

First of all, thanks to everyone who posted their answers. Here is my "ultimate" wget script to download a website recursively: `wget --recursive $ \`

Afterwards, it may be necessary to strip the query params from URLs like `main.css?crc=12324567` and to run a local server (e.g. via `python3 -m http.server` in the directory you just wget'ed) so that JS runs.

Please note that the `--convert-links` option kicks in only after the full crawl has completed.

Also, if you are trying to wget a website that may go down soon, you should get in touch with the ArchiveTeam and ask them to add your website to their ArchiveBot queue.
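The query-string cleanup can be sketched like this. It assumes wget saved assets under names such as `main.css?crc=12324567` and that converted links contain either a literal `?` or, in some configurations, `%3F`. The function name `strip_query_suffixes` is hypothetical, and only `.css`/`.js` references inside `.html` files are rewritten.

```shell
#!/bin/sh
# Sketch: strip query strings such as "?crc=12324567" from a wget mirror.
# strip_query_suffixes is a hypothetical helper; $1 is the mirror directory.
strip_query_suffixes() {
  dir=$1

  # 1) Rename files like "main.css?crc=12324567" to "main.css".
  #    If two files differ only in the query string, one overwrites the other.
  find "$dir" -type f -name '*[?]*' | while IFS= read -r f; do
    mv -- "$f" "${f%%[?]*}"
  done

  # 2) Drop "?..." or "%3F..." after .css/.js references in HTML files.
  #    sed -i.bak works with both GNU and BSD sed; the backups are removed below.
  find "$dir" -type f -name '*.html' -exec \
    sed -E -i.bak 's/\.(css|js)(\?|%3F)[^"'\'' )>]*/.\1/g' {} +
  find "$dir" -type f -name '*.html.bak' -exec rm -f {} +
}
```

After the cleanup, serving the directory with `python3 -m http.server` from inside it lets the pages load their JavaScript over HTTP instead of from `file://` URLs.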
